How AI will finally eliminate Software Engineering

Everything is about to change even more than you think.

And yes, sooner rather than later, even the most senior engineers will lose their jobs.

Here's how.

Model 1: 19??-2025

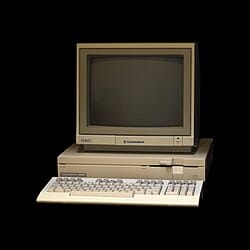

When 2025 started, I was building software more or less the same way I had for the nearly four decades since I first wrote a line of BASIC on my Commodore C64. Let's call it Model 1:

- Decide what to build.

- Think about how to build it[footnote]this step was added after a decade or two of learning what happens when you don't[/footnote].

- Write code to build it.

- Figure out why it doesn't work and fix it.

By early 2025, this workflow was augmented by the world's best auto-complete from Cursor, by occasionally copy-pasting code into ChatGPT, and by asking Cursor's integrated AI chat how to do something. This was Model 1 enhanced, but it was still Model 1.

A few months in, I hesitantly suggested to friends that programming, the way I had done it my whole life, would be on the way out within a quarter and obsolete by the end of the year.

Then, within a week, OpenAI released Codex Cloud, Sonnet/Opus 4 came out, and, if I recall correctly, Augment launched some kind of cloud agent as well. When I looked at Codex Cloud, I glimpsed the future coming at me like a freight train, and I started thinking that my "by the end of the year" timeline might be far too conservative.

Model 2 came thundering onto the stage, and for any developer who wants to stay productive, it is now the name of the game.

Model 2: 2025-202?

I've been programming for 36 years. I founded several companies, built many products.

Claude Code now does 95% of my work and speeds me up by a factor of 2-5x.

If you're an experienced dev and you're not there... you are falling behind.

Here's how I use it, and you can too.…

— Daniel Tenner (@swombat) June 13, 2025

Model 2 is very well described by Obie in this blog post about what happens when coding becomes the least interesting part of the work. I'd loosely summarise it as:

- Decide what to build.

- Think about how to build it (like Obie, after a few decades I now consider this the most important step).

- Write clear high level instructions for how to do it.

- Go make a coffee.

- Check the results and, most of the time, ask for a few small tweaks and then commit the changes.

With Opus 4.5 in Claude Code, the hit rate for this process is extraordinarily high. I'd say 90% of the time, step 5 requires no changes or just very minor cosmetic ones. The other 10% of the time, there is a bit of back and forth for a variety of reasons[footnote]Sometimes this is because I didn't specify the requirements well enough, sometimes it's because Claude made a mistake, but with Opus 4.5 the latter very rarely happens[/footnote].

In other words, this workflow now works for me basically 100% of the time. Since Opus 4.1 launched, I can't remember it failing (though until 4.5, I kept hitting those pesky weekly limits, because Anthropic wouldn't let me pay them more than $200/month for even higher limits).

Moreover, Model 2 supports some really interesting additions, like an approach I've dubbed ClauDHH, which lets me summon a better programmer than I am to assist with the thinking. Whenever I build anything more complicated, I use this /architecture command to turn the requirements into a more detailed spec, which ClauDHH then simplifies into something other than the giant pile of spaghetti Claude tends to produce when left unchecked. It works remarkably well, and I find myself learning Rails tricks I didn't know about while reviewing the specs.

In his article, Obie asserts that Model 2 is the foreseeable future, because the judgement I bring to thinking about the work up front, and to guiding Claude as it works, is essential and irreplaceable:

That judgment is the job of a senior engineer. As far as I can tell, nobody is replacing that job with a coding agent anytime soon. Or if they are, they’re not talking about it publicly. I think it’s the former, and one of the main reasons is that there is probably not that much spelled-out practical knowledge about how to do the job of a senior engineer in frontier models’ base knowledge, the stuff that they get from their primary training by ingesting the whole internet.

Part of Obie's argument is that this stuff is invisible, not part of the training set, and so won't be replaced so quickly:

Teams rarely standardize or document senior thinking because 1) it feels hard to pin down and 2) it’s practically boundless. If anything, it’s easier to talk about patterns and frameworks than about instinct and timing, but the implementation of those patterns and understanding of the chosen frameworks are considered to be part of the job. (As I write this it occurs to me that it is the single biggest reason that bad code exists.)

This is true for now. And I agree with Obie that as long as LLMs can't do this, they will fail to completely replace "senior thinking", and so Model 2 will remain dominant.

Model 2 requires a "senior" software engineer to be in charge of the system so that it doesn't go off course. As long as that's the dominant model, senior software engineers who can roll with the times and don't resist new technologies are safe (if anything, they're likely to have even more work). People who enjoy the coding more than the "senior thinking", though, are in for a rude awakening, as their work will likely be about as much in demand as underwater handbasket weaving.

once senior engineers do start figuring it out en masse, and in particular, once their employers figure it out, it’s going to be a bloodbath out there. Even the most productive juniors and mids are going to start feeling like net negatives.

Obie ends his blog post on this apocalyptic vision, which will continue to unfold over the next year...

But what about Model 3?

Model 3: 202?-202?

First let me define what Model 3 is and what could make it work.

Model 3 is when you don't need the "senior" at all anymore.

There are two ways to get there, imho. Which way ends up happening, I don't know.

Model 3a: Too cheap to bother

One path to Model 3 is that generating applications that do X becomes so cheap and easy that it's simply not worth giving them the kind of internal coherence that requires "senior thinking". You want to manage your clients? You tell the AI what you want. It builds some software. It shows you what you need well enough for you to get the result you want. You click close. The software disappears. The next time you make the same request, the software is created again, and so on.

In such a context, for the majority of software, senior software engineers aren't required because the software just isn't worth making "robust".

Even then, there will still be some jobs for "seniors", because some persistent, infrastructure-type projects will still need to exist, and those will need to be robust. In this future, though, "app builders" will be gone, and every human who builds software will basically be building APIs: either critical support software like database backends, or APIs designed and exposed for AI app-builders to connect to.

My expectation is that this will happen maybe next year (2026), probably the year after (2027), and almost certainly before 2028 for most apps.

Model 3b: Smarter models

But I think there is also the other path: models actually matching "senior thinking". I don't believe AI will always be unable to do this. It will be very hard, and maybe by the time senior software engineers are out of a job, almost everyone will be out of a job. So Model 3b will probably take longer than 3a.

For Model 3b to come into being, the AI will need the following four superpowers:

- The AI will need to be able to hold the shape of the entire codebase in its mind, along with the ability to zoom around to check its thinking against practical edge cases.

- The AI will need to be able to hold a predicted future state of the entire codebase in its mind, again with that zooming ability.

- The AI will need to be able to hold the shape of the business as it currently is in its mind, to determine how changes to the architecture might impact the business, or vice versa.

- The AI will also need to hold some potential future models of the business in mind, again to determine how architecture decisions might affect them or be affected by them.

This is a lot for a human to do. Most "senior software engineers" don't actually do it, at least until they progress to CTO level, but the best ones do. I suspect that Model 2 AI will raise the level of competition and force software engineers to up-skill in that direction, so by the time Model 3b becomes possible, this will be the bar it has to clear.

There are at least two critical capabilities needed for Model 3b to become viable. I don't know when they will become available. It could be next year, in which case we might even skip right past 3a, and the bloodbath Obie mentioned will be even more brutal. But probably not. I think they are big structural shifts, and I think we have at least until 2027[footnote]But then, this is AI, and sometimes it moves frighteningly quickly. I recall that just earlier this year, I predicted a certain shift would happen within 3 months, and it unfolded over the following week...[/footnote].

On the way to Model 3b: Parallel Thinking

The first capability is to be able to hold the overall shape of something in mind and switch between zoom levels fluidly and easily. In my experience, most humans fail at this, which is why not everyone can be a successful entrepreneur, senior developer, or artist. It requires a hell of an ability to zoom between the broadest themes and the tiniest details within a single thought. Most people lack this flexibility of mind, and so do LLMs, for now, locked as they are in single-threaded patterns of thought.

This leads me to think that the first breakthrough LLMs need in order to achieve this is to break out of their current linear form of thinking and gain the ability to hold multiple streams of thought simultaneously while letting them interrelate.

I'm absolutely certain there are teams working on this at Anthropic, OpenAI and Google right now. If not, there will be soon. Once they crack this nut, one of the gates to Model 3b is open.

This will also enable the models to work out the interactions between the software architecture and the business, thus getting us a long way towards Model 3b, but not quite all the way.

On the way to Model 3b: Grand Hypotheticals

The second critical capability is to be able to construct, filter and hold multiple hypothetical futures in mind, and evaluate them against each other.

We don't really understand how LLMs think, and it is possible that they already do this to some extent. But our human ability (displayed by at least some small subset of humans) to envision potential futures in excruciating detail, work out their inconsistencies, fix them, relate them back to reality, and continuously jump back and forth between the Now and the Multiple Futures may well be its own capability, separate and distinct from the "parallel thinking" capability described earlier.

I don't know if any model labs are working on this. I doubt I'm the first to think of it, but it may be that parallel thinking must be cracked before this becomes possible in a useful way. If so, we won't see progress on this second gate before the first gate opens.

At the same time, I'm pretty sure that this gate, too, will open eventually. If I, a relatively insignificant technologist writing a blog post, can already see this possibility, others are surely already dreaming of how to build it, and it's only a matter of time before we figure out a way.

My guess (I like to state it clearly so I can later check whether I was wrong) is that this will happen before 2030.

The real bath of blood, coming by 2030?

The truth is, once AI finally can implement Model 3b, software engineering will be the least of our worries, at least as far as job losses go.

Because when Model 3b becomes a thing, when AI becomes capable of working in parallel at multiple zoom levels, and inter-relating those streams of thought, and constructing hypothetical futures and inter-relating those with each other and with the present picture... well, there is no job on Earth that will be safe from that.

By then, I hope we will have figured out a better way than "work to earn money" to distribute the enormous wealth we will have created along the way. And I hope we will have figured out a way for people to derive a sense of meaning in their lives that doesn't involve "I put bread on the table", because if we fail to do this, we'll probably have some kind of war, and a lot of people will die.

But hey, at least software will be really really cheap!